Dr. Julius Neubronner’s Miniature Pigeon Camera

Attaching stories to objects: the return of the blogject meme

This article in the NYT made me think that there seems to be a new bubble in platforms that allow attaching stories to physical objects. The article mentions Tales of Things ("Slap on a sticker with a newfangled bar code, and anybody with a properly equipped smartphone can scan the object and learn..."), Itizen ("a tell-and-tag approach") and Sticky Bits ("can also be used to link content to an existing bar code").

Some excerpts I found interesting:

"Goldstein theorizes that the motive was the same “microboredom” that inclines users of mobile check-in apps to announce that they’ve arrived at Chili’s — except that users could broadcast not just where they were but also what objects were around them. Some do use StickyBits to communicate something specific to people they know, but many essentially use it as a media platform. (...) Under that scenario, things are being linked to a story not so much in the form of narrative as of cumulative data. The continuum moves even further in the direction of raw information when you consider what tech experts call the “Internet of things” — more and more stuff produced with sensors and tags and emitting readable data (...) As more objects have more to say, the question becomes what we want to hear, and from what."

Curiously, I haven't seen any mention of Thinglink, which was one of the first platforms to propose a globally unique object identifier. Developed by Social Objects Oy, a company founded by Ulla-Maaria Mutanen and Jyri Engeström, it's now a platform where the thinglink object code is linked to further references, such as photos, descriptive text, tags, maker(s), owner(s), and web links.

Why do I blog this? I have been following this trend for a while, and it's funny to see so much activity along these lines. Possibly some interesting things to be discussed at Lift11. What I am curious about is how this is connected to blogjects and how things have changed in the last few years.

Networked objects 2010

An update for myself. Various networked objects that I've run across recently and that seem relevant to my projects:

Analogue Tape Glove (Signal to Noise)

"This interactive sound installation deals with "exploring the physical connection between people and technology". A tangible user interface is provided in the form of a glove, worn by the participant as they are invited to interact with an analogue tape surface. As the glove comes in contact with the tape, sound is generated and can be manipulated via touch and movement. The pre-recorded sound on the tape is a random collage of compiled material including a range of musical styles & found recordings. According to its creators, the work “explores the somewhat obsolete medium of tape through a playful and sonically interesting experience."

Daily Stack (sebastian rønde thielke and anders højmose)

"The simple design allows users to help track their work flow by creating physical representations of their tasks. The design consists of a small base and a series of wood blocks that each have a different colour and shape. Each colour represents a different task and the time interval is determined by the size of the block. The user stacks their tasks on the base, committing to them. the base contains electronics that communicate with a computer, tracking time and tasks in progress digitally. The user can even go back through their archive and look at previous stacks. the design helps the user better visualize their time, helping them make the most of it."

"Slurp is tangible interface for manipulating abstract digital information as if it were water. Taking the form of an eyedropper, Slurp can extract (slurp up) and inject (squirt out) pointers to digital objects. We have created Slurp to explore the use of physical metaphor, feedback, and affordances in tangible interface design when working with abstract digital media types. Our goal is to privilege spatial relationships between devices and people while providing new physical manipulation techniques for ubiquitous computing environments."

Kokonatchi / ココナッチ (University of Tokyo and Waseda):

" Looking something like a hybrid stress ball and giant butter bean, Kokonatchi connects to your computer via a USB lead, sits on your desk, wiggles and lights up when a new tweet enters your account feed. It contains RGB LEDs which change color according to the context or ‘emotion’ of the tweet, and vibrates or ‘shivers’ when it is scared"

Olars (Lars Marcus Vedeler)

"Olars is an electronic interactive toy inspired by Karl Sims' evolved virtual creatures. Having thousands of varieties in movement and behaviour by attaching different geometrical limbs, modifying the angle of these, twisting the body itself, and by adjusting the deflection of the motorised joints, results in both familiar and strange motion patterns."

"OnObject is a small device user wears on hand to program physical objects to respond to gestural triggers. Attach an RFID tag to any objects, grab them by the tag, and program their responses to your grab, release, shake, swing, and thrust gestures using built in microphone or on-screen interface. Using OnObject, children, parents, teachers and end users can instantly create gestural object interfaces and enjoy them. Copy-paste the programming from one object to another to propagate the interactivity in your environment."

Species (Theo Tveterås and Synne Frydenberg)

"Interactive toy that tunes in on bacteria frequency and amplifies it."

Why do I blog this? a kind of messy list but it's sometimes good to collect curious projects and see how they compare to what has been done in the past. Some interesting new trends ahead in terms of interactions: augmentation by other channels than visual representation, new forms of object connectivity (slurp), the importance of original material (wood, textiles). It's not necessarily brand new in 2010 but what's curious is that the implementation and the usage scenario are intriguing and go beyond classical utilitarian ideas.

Networked objects session at Lift Asia 2009

The "networked objects" session at Lift Asia 09 was a good moment with three insightful speakers: Rafi Haladjian (in transition from Violet to his new company called sen.se), Adrian David Cheok (from the Mixed Reality Lab in Singapore and Keio University) and Hojun Song. My notes from the session hereafter.

In his presentation entitled "Demystifying the Internet of Things", Rafi Haladjian shared his perspectives on the Internet of Things. Starting from his own experience with the Nabaztag and other Violet products, he made a point of adopting a down-to-earth approach to the Internet of Things. Based on an analysis of the Darwinian evolution of devices and connectivity, he gave examples such as the teddy bear, which went from the basic version to the talking bear (because the maker needs to recreate value, and thus new products) and finally to new toys with RFID, now that we have cheap technologies. He also took the example of the scale (mechanical bathroom scale - digital scale - wifi bathroom scale).

He then highlighted "a raw and cynical definition of the IoT":

- The expansion of the internet to any type of physical device, artifact or space. Which is not a decision but something that is happening "organically" because of the availability of cheap communication technologies

- The product of decentralized, loosely joined decisions

- Something that will be technology and application-agnostic

These three characteristics led him to sketch possible evolutions of the IoT. To do this, he stressed how important it is to look at past experiences. The mechanical typewriter (one purpose) evolved into the word processor (a computer that could only be used to type in text) AND into another branch: the personal computer (multi-purpose, not just a word processor) that then took the form of laptops or netbooks (with an infinite number of applications). If we look back at things such as bathroom scales, now that we have ICT in there, the wifi scale does the same job as a scale, only better, but it can also do other things (send recommendations, update your doctor, personalize the gym equipment, make the information completely social, offer games with prizes and promotions, organize strikes!). As he explained, this sounds weird, but is it so different from the iPhone? The iPhone showed that you can have a device and let third parties make applications, without having to decide yourself what the device should be used for.

According to him, the IoT changes the way devices should act in the following ways:

- From one purpose to a bundle of sensors and output capabilities designed for a context

- Leads to application-agnostic devices that are open to third parties

- Most probably you will not be able to create new types of devices: it's easier to piggyback on existing devices and use habits (that people are familiar with)

- You must be economically realistic, you cannot turn a device into an iphone, you must solve the cost/price/performance issue

In addition, such a system helps solve what he called the "Data Fishbowl" effect: today all our data are like fish in a fishbowl, and there is just one spot in our environment where the information is: the computer. The IoT has the ambition to vaporize information... like butterflies, or, more simply, like post-it notes. It's about putting the information in context.

He concluded by saying that the purpose is to go from a world where we have a handful of single-purpose devices to one that gives sense to everything, which is what Rafi is going to be doing in his next company: sen.se

(Poultry Internet)

The second presentation by Adrian-David Cheok was called "Embodied Media and Mixed Reality for Social and Physical Interactive". It outlined new facilities within human media spaces supporting embodied interaction between humans, animals, and computation both socially and physically, with the aim of novel interactive communication and entertainment. Adrian and his team indeed aim to develop new types of human communications and entertainment environments which can increase support for multi-person multi-modal interaction and remote presence.

Adrian's presentation consisted of a series of ubiquitous computing environments based on an integrated design of real and virtual worlds that aims to be an "alternative" to existing systems. His examples aimed at revealing the paradigm shift in interaction design: it's not "just" about sharing information but also experiences.

He started from the well-known examples he worked out at his lab with Human Pacman (Pacmen and Ghosts are now real human players in the real-world experiencing mixed computer graphics fantasy-reality) or the hugging pajamas (remote-controlled pajama that could be hugged through the internet). He then moved to "human-pet interaction systems":

- Poultry Internet: remote petting through the internet (red door / blue door to test pet preference to interactions & objects)

- Metazoa Ludens, which lets a human play a computer game with a pet: the human user controls an avatar which corresponds to a moving bait that a hamster tries to catch. The movements of the animal in the real world are translated into the digital environment, and the pet avatar chases the avatar controlled by the human.

He finally spent the last part of his presentation dealing with "Empathetic living media", a new form of media that follows two purposes: (1) To inform: Ambient living media promotes human empathy, social and organic happenings around a person’s life, (2) To represent: Living organisms representing significant portions of one’s life adds semantics to the manifestation. Examples corresponded to glowing bacteria (Escherichia coli) or the curious Babbage Cabbage System:

"Babbage Cabbage is a new form of empathetic living media used to communicate social or ecological information in the form of a living slow media feedback display. In the fast paced modern world people are generally too busy to monitor various significant social or human aspects of their lives, such as time spent with their family, their overall health, state of the ecology, etc. By quantifying such information digitally, information is coupled into living plants, providing a media that connects with the user in a way that traditional electronic digital media can not. An impedance match is made to couple important information in the world with the output media, relating these issues to the color changing properties of the living red cabbage."

(Babbage Cabbage)

In his conclusion, Adrian tried to foresee potential vectors along these lines:

- Radically new and emotionally powerful biological media yielding symbiotic relationships in the new ubiquitous media frontier

- Plants which move: Animated display system, plants as sensors

- Ant-based display system

- Cuttlefish Phone

The third presenter in the session, Korean artist Hojun Song, gave a quick description of his current project: the design and crafting of a DIY/open source satellite. He went through the different steps of his project (design rationale, funding, technical implementation) to show an interesting and concrete implementation of a networked object. Concluding with a set of potential issues and risks, he asked participants for help and contributions.

Wembley mousetraps as blogjects

It seems that mousetraps too can become blogjects:

"[Rentokil] added a small sensor and a wireless module to its traps so that they notify the building staff when a rodent is caught. This is a big improvement on traps that need to be regularly inspected. A large building might contain hundreds of them, and a few are bound to be forgotten. Since June 2006 thousands of digital mousetraps have been put in big buildings and venues such as London's new Wembley Stadium. The traps communicate with central hubs that connect to the internet via the mobile network to alert staff if a creature is caught. The system provides a wealth of information. The data it collects and analyses on when and where rodents are caught enable building managers to place traps more effectively and alert them to a new outbreak."

Why do I blog this? a basic example of a curious machine-to-machine communication involving animals.
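
To make the "when and where rodents are caught" part a bit more concrete, here is a minimal Python sketch of the kind of aggregation such a system might run on trap notifications. Everything in it is an assumption of mine for illustration: the event format, the trap identifiers, the zone names and the sample data are hypothetical and do not describe Rentokil's actual system.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class CatchEvent:
    """One 'rodent caught' notification relayed by a hub (hypothetical format)."""
    trap_id: str
    zone: str            # e.g. a floor or a room; assumed to be known per trap
    caught_at: datetime


def catches_per_zone(events):
    """Count catches per zone, so that busy zones stand out."""
    return Counter(event.zone for event in events)


def catches_per_hour(events):
    """Count catches per hour of day (0-23), to see when rodents are active."""
    return Counter(event.caught_at.hour for event in events)


def zones_to_reinforce(events, threshold):
    """Return zones with at least `threshold` catches, sorted by name."""
    counts = catches_per_zone(events)
    return sorted(zone for zone, n in counts.items() if n >= threshold)


# Made-up sample data, for illustration only.
SAMPLE_EVENTS = [
    CatchEvent("trap-001", "kitchen", datetime(2009, 6, 1, 2, 15)),
    CatchEvent("trap-002", "kitchen", datetime(2009, 6, 2, 3, 40)),
    CatchEvent("trap-017", "loading-dock", datetime(2009, 6, 2, 23, 5)),
    CatchEvent("trap-001", "kitchen", datetime(2009, 6, 4, 2, 50)),
]

if __name__ == "__main__":
    print(catches_per_zone(SAMPLE_EVENTS))
    print(zones_to_reinforce(SAMPLE_EVENTS, threshold=2))
```

The point is simply that once each trap reports its own catches, placing traps "more effectively" becomes a matter of counting events by place and time.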

Internet FOR things

In his graduation thesis entitled "Social RFID", at the Utrecht School of the Arts, Patrick Plaggenborg interestingly explores what an "Internet FOR Things" means, as differentiated from the so-called "Internet of Things". The document can be downloaded here.

The goal of the project is to explore supply chain RFID infrastructure to form a public platform and "reveal the invisible emotions in things" so that "people are stimulated to look at objects differently", especially those seemingly worthless objects.

More than the project itself, I was intrigued by the "internet for things" notion and its implication that Patrick defines as follows:

"A world with all objects being tagged and uniquely identified is still not very close, but we can think of scenarios and applications for it. The infrastructure will be rolled out slowly, starting with the bigger and more expensive items. In the mean time designers can speed up this process with Thinglinks and their own RFID tags to create test beds for their own interest. Using this infrastructure, small applications will take off as forerunners to a world where digital interaction with every day objects will be common. This is not the ‘Internet Of Things’, where objects connect to create smart environments and where they collect and exchanging data with sensors. This is about the ‘Internet For Things’."

Why do I blog this? What I find intriguing here is the parallel wave of design research concerning the Internet of Things which seems to me far beyond the current vector pursued by lots of research labs in the domain. Combined with blogjects, thinglinks and relevant interfaces there is a strong potential for these ideas.

To some extent, I am curious about how interested the new Nokia research lab in Lausanne is in this sort of exploration.

"Deer Blogger"/"Deer Tracking via e-mail"

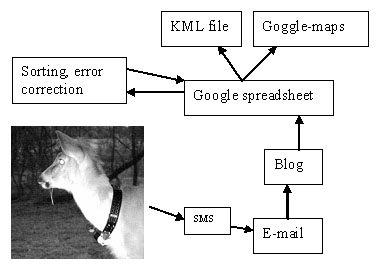

Geolocated animals are now more and more common (after pigeon blogs back in 2006); the most recent example is this deer named Thor that blogs its own position on Google Earth. What is interesting in this case is not the animal blogging meme (although it's yet another example) but the underlying process: a "Mail to Map/SMS-to-Map/Mail to Google Earth" process as described by the developer:

Also intriguing is this "important notice" by the guy who set up this whole thing:

"Important notice: since the displayed coordinates belongs to a live animal (currently a white-tailed Deer nicknamed Thor), by reading this page and looking at the map you are accepting terms of agreement: “do not harm the animal in anyway’. Actually it lives on private grounds or a preserve territory anyway."

Yes, people, remember: although it looks like a virtual environment, it's a real deer.

Why do I blog this? I like the "blogject tracking" via email dimension here.
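
For the record, here is how a "Mail to Google Earth" step could look in Python. This is only a sketch under my own assumptions, not the developer's implementation: I assume a plain-text (non-multipart) e-mail whose body contains the position as `lat=... lon=...`, and the sender address and coordinates in the sample are invented. The output is a one-placemark KML document, the format Google Earth reads.

```python
import re
from email import message_from_string
from xml.sax.saxutils import escape

# Assumed body format: "lat=<decimal> lon=<decimal>" somewhere in the text.
COORD_PATTERN = re.compile(
    r"lat\s*=\s*(-?\d+(?:\.\d+)?)\s+lon\s*=\s*(-?\d+(?:\.\d+)?)",
    re.IGNORECASE,
)


def parse_position(raw_email):
    """Extract (subject, latitude, longitude) from a raw plain-text e-mail."""
    message = message_from_string(raw_email)
    body = message.get_payload()  # a str for simple, non-multipart messages
    match = COORD_PATTERN.search(body)
    if match is None:
        raise ValueError("no coordinates found in message body")
    lat, lon = float(match.group(1)), float(match.group(2))
    if not (-90 <= lat <= 90 and -180 <= lon <= 180):
        raise ValueError("coordinates out of range")
    return message["Subject"] or "unnamed", lat, lon


def to_kml(name, lat, lon):
    """Build a one-placemark KML document (KML puts longitude first)."""
    return (
        '<?xml version="1.0" encoding="UTF-8"?>\n'
        '<kml xmlns="http://www.opengis.net/kml/2.2">\n'
        "  <Placemark>\n"
        f"    <name>{escape(name)}</name>\n"
        f"    <Point><coordinates>{lon},{lat}</coordinates></Point>\n"
        "  </Placemark>\n"
        "</kml>\n"
    )


# Invented sample message, for illustration only.
SAMPLE_EMAIL = (
    "From: collar@example.org\n"
    "Subject: Thor\n"
    "\n"
    "lat=45.5 lon=-73.25\n"
)

if __name__ == "__main__":
    print(to_kml(*parse_position(SAMPLE_EMAIL)))
```

An SMS-to-Map variant would presumably only differ in how the message arrives; the parsing and KML steps would stay the same.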

A taxonomy of "coded domestic objects":

In Software, Objects and Home Space, Martin Dodge and Rob Kitchin (Environment and Planning A) examine the relationship between objects and software in detail. They describe how ubiquitous computing - through the embedding of sensors and computation in objects - is transforming daily artifacts, giving them new capacities. To do so, they came up with an interesting taxonomy of "coded domestic objects":

"Coded objects can be subdivided into two broad classes based on their relational capacities. First, there are unitary objects that rely on code to function but do not record their work in the world. Second, there are objects that have an ‘awareness’ of themselves and their relations with the world and which, by default, automatically record aspects of those relations in logs that are stored and re-used in the future (that we call logjects [an object that monitors and records in some fashion its own use]). (...) In broad terms unitary coded objects can be divided into those that function independently of their surroundings and those that are equipped with some kind of sensors that enable the object to react meaningfully to particular variables in their immediate environment. (...) We can identify two main classes of logject: impermeable and permeable. Impermeable logjects consist of relatively self-contained units such as a MP3 player, a PDA or satnav. Such devices trace and track their usage by default, recording this data as an embedded history; are programmable in terms of configurable settings and creating lists (e.g. play lists of songs, diary entries and route itineraries); perform operations in automated, automatic and autonomous ways; and engender socially meaningful acts such as entertaining, remember an important meeting and helping not to get lost. (...) Permeable logjects do not function without continuous access to other technologies and networks. In particular, because they need the constant two-way of data exchanges, they are reliant on access to a distributed communication network to perform their primary function. Such logjects track, trace and record their usage locally but because of memory issues, the necessity of service monitoring/billing, and in some cases a user’s ability to erase or reprogram such objects, their full histories are also recorded externally to its immediate material form"

And about how these coded objects make "home differently":

"the everyday use of coded objects reshapes the spatiality of the home by altering how domestic tasks are undertaken (and not always more conveniently for all), introducing new tasks and sometimes greater complexity, and embedding the home in diverse, extended networks of consumption and governmentality. (...) the transition into the fully software-enabled home is a slow process. Most homes contain a mix of non-coded and coded technologies. (...) a useful parallel can be drawn between the coding of homes and the initial development of domestic electricity. At first, there were no electrical appliances and whole classes of electrical tools had to be invented. Over an extended period of time existing technologies were converted to electricity (e.g. gas lights to electric lights, open hearth to electric cooker, washtub to washing machine, etc.). Today, the extent to which electricity powers almost everything of significance in our homes is largely unnoticed in a Western context (except in a power cut). "

Why do I blog this? The taxonomy of objects is relevant as it shows the sort of "current design space", mapping the different possibilities depending on the coded behavior. Moreover, the thing I like with these authors is that their reading of ubicomp is definitely more about the "messily arranged here-and-now" and less about the "supposed smart home of the future". Surely some material to reflect on in current writings with Julian, especially about the relationships between technologies and spatial behavior/materialities.
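
To see the taxonomy as a design space, it can be written down as a small decision procedure. The Python sketch below is my own reading of the quoted passage, not something from the paper: the three boolean attributes and the example objects are assumptions, except for the MP3 player, which the authors themselves give as an impermeable logject.

```python
from dataclasses import dataclass
from enum import Enum


class CodedObjectClass(Enum):
    UNITARY_INDEPENDENT = "unitary object, independent of its surroundings"
    UNITARY_SENSING = "unitary object, reacts to its environment"
    IMPERMEABLE_LOGJECT = "impermeable logject"
    PERMEABLE_LOGJECT = "permeable logject"


@dataclass(frozen=True)
class CodedObject:
    name: str
    records_own_use: bool      # keeps a log of its own use (makes it a logject)
    senses_environment: bool   # reacts to variables in its surroundings
    needs_network: bool        # cannot do its primary job without a network


def classify(obj):
    """Place a coded object in one of the four classes from the quote."""
    if obj.records_own_use:
        # Logjects split on whether they depend on continuous network access.
        if obj.needs_network:
            return CodedObjectClass.PERMEABLE_LOGJECT
        return CodedObjectClass.IMPERMEABLE_LOGJECT
    # Unitary objects split on whether they sense their environment.
    if obj.senses_environment:
        return CodedObjectClass.UNITARY_SENSING
    return CodedObjectClass.UNITARY_INDEPENDENT


# Example objects; only the MP3 player comes from the quoted text.
EXAMPLES = [
    CodedObject("digital alarm clock", False, False, False),
    CodedObject("heating controller with a temperature sensor", False, True, False),
    CodedObject("MP3 player", True, False, False),
    CodedObject("mobile phone", True, False, True),
]

if __name__ == "__main__":
    for obj in EXAMPLES:
        print(f"{obj.name}: {classify(obj).value}")
```

Real objects are of course messier than three booleans, which is rather the point of the authors' "here-and-now" reading.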

Text clothes

Back during the first internet bubble, mobile computing was already a hot thing and people started having ideas about how to connect things and people. One of them was Skim, which enabled a sort of physical-to-digital connection through an identification number, written on a piece of clothing, that others can text or email. Basically, a note sent to your I.D. number is forwarded to your skim.com e-mail address. Your t-shirt could tell others how to get in touch with you BUT they won't know your real identity.

The whole process is summarized here:

"There is a "unique mailbox number" on every fashion piece. It is six figures long. On the T-shirts it is on the sleeve, on the jackets it is on the pocket etc. In the packaging of the product there is a card with an "access code" on it. Together the "unique mailbox number" and the "access code" give you access to the world of skim.com. Your skim.com mail account is now "unique mailbox number"@skim.com. This is yours forever. It is private to you. See our privacy promise. You can give the email out to your friends, collegues, dates etc. To help you, some of our products come with business cards with your special number on it. To check your mail, simply log on to the skim.com website, and go to the communications section. Then it is simple: enter your "unique mailbox number" and your "access code" and you can check/send mail."

Why do I blog this? looking for service failures for my "tech failure" project. This skim.com thing is interesting in itself but obviously failed for some reason (I'd be glad to know more about why). I guess the project was also an enabler of social comparison ("you have it, you're part of that community")

It's also important to note the persistence of such ideas, since Reactee is a create-your-own-tshirt platform that also lets you display a code on the t-shirt (to text the person who wears it).

Thanks Luc!

Beat wartime empathy device

Look at this Beat wartime empathy device by Dominic Muren. As he explained to me in his email:

"Though it's not the most traditional interface design, I feel more and more that really functional interfaces in our world of mediation, will need to be physical. And what more complicated topic to give physicality than war, and the civilian relationship to it. The Beat wartime empathy device is actually a pair of dogtag-like receiver and transmitter, one worn by a soldier, and the other anonymously "adopted" by a civilian. The soldier's heartbeat is recorded, and transmitted, real time, to the civilian, where it is physically thumped against their chest, another heartbeat next to theirs. They feel the soldier's fear, calm, or, god forbid, death. With such an intimate connection, it takes a hard heart indeed to ignore the true cost of war."

Why do I blog this? an intriguing project about mediating physiological cues. I like the idea of a very simple sign (heart beat) being conveyed to connect people.

Web presence for people, places, Things

In the paper People, Places, Things: Web Presence for the Real World, researchers at HP described how to support "web presence" for people, places and things. It actually refers to the Cooltown project, which was conducted 5-6 years ago. Some elements from the paper:

"We put web servers into things like printers and put information into web servers about things like artwork; we group physically related things into places embodied in web servers. Using URLs for addressing, physical URL beaconing and sensing of URLs for discovery, and localized web servers for directories, we can create a location-aware but ubiquitous system to support nomadic users. On top of this infrastructure we can leverage Internet connectivity to support communications services."

Why do I blog this? Although the project was more about supporting communication services and providing nomadic users with access to objects/information without a central control point, I was interested in it from another perspective: the agency of artifacts. Beyond their potential accessibility through the Internet, what does it mean when my watch, my lamp or even toilets have a web presence? This aspect is not really addressed in the paper and more obviously connects with the Near Future Laboratory interest in blogjects.

Reference: Kindberg, T., Barton, J., Morgan, J., Becker, G., Caswell, D., Debaty, P., Gopal, G., Frid, M., Krishnan, V., Morris, H., Schettino, J., Serra, B. & Spasojevic, M. (2000). People, Places, Things: Web Presence for the Real World. Third IEEE Workshop on Mobile Computing Systems and Applications, pp. 19-28.

Affective body memorabilia

Via Frédéric: Affective Diary is a project conducted by SICS and Microsoft Research by Kristina Höök, Martin Svensson, Anna Ståhl, Petra Sundström and Jarmo Laaksolahti (SICS), and Marco Combetto, Alex Taylor and Richard Harper (Microsoft Research). This diary is based on an “affective body memorabilia” concept since it captures some of the physical, bodily aspects of the owner's experiences and emotions through body sensors (the data being uploaded via mobile phone). Eventually, it forms "an ambiguous, abstract colourful body shape". These shapes can be made available to others and "the diary is designed to invite reflection and to allow the user to piece together their own stories".

The usage scenario is described as follows:

"In Affective Diary users carry bio sensors that capture for example movement, skin temperature, galvanic skin response and pulse. Activities on the mobile phone is also captured, sent and received SMSs, Bluetooth presence, photos taken with the camera and music that you have listened to during the use. The user carries the sensors, for example, during a day, in the evening at home the data is downloaded into a tablet PC and the user’s day is represented in Affective Diary as an animation, where the data is shown. The bio data is visualised as characters with different body postures and colours, representing movement and arousal. The data from the mobile phone is graphically represented and clicking on the different representations makes it possible to read SMS-conversations, view other Bluetooth units and photographs and hear the music again. There is also the possibility to scribble in the diary and the characters representing bio data can be changed, both the body posture and the colour. The Affective Diary aims at letting users relive both the physical parts of their experiences as well as the cognitive parts."

Why do I blog this? what I find intriguing (and new) here is that, compared to existing projects (such as Bio-Mapping by Christian Nold), the "history" feature is taken into account (as shown maybe by the picture above). In a sense, it's interesting to think about how a history of physiological reactions/emotions can be turned into a design object. This is not only interesting in mobile contexts but also in terms of object interactions. How would an object register emotional content? Thinking about Ulla-Maaria's project, it would be curious to add this emotional component (especially for objects created by oneself).

Personal Progress Management (PPM)

MyProgress (via Techcrunch) is an interesting service that provides different "Personal Progress Management (PPM) tools". It automatically observes and analyzes all essential aspects of your life. With MyProgress, you can watch your progress and discover your productivity.

"MyProgress is a Web 2.0 service aimed to bring progress monitoring features (generally used in computer RPGs) into real lives. It is a family of services we called Personal Progress Management. With MyProgress, users can track their personal finances, skills, knowledge, wealth and health dynamics and figure their place in the real word. (...) The core feature of MyProgress is its intelligence: smart technologies track every piece of information users enter, whether it would be a new purchase, capital gains, an hour of photographic or driving experience, rental price change, etc., and provide detailed overview on who these people are and how fast they are progressing in comparison with the others across multiple categories, such as age, occupation, and location. Intelligence squeezes an orange out of every piece of information our customers enter and extrapolates to every single user, thus, it can provide them heaps of analytics about their lives and build very accurate forecasts. "

Why do I blog this? I am not interested at all in the "intelligence" freakiness nor in the idea of having a system that analyzes my financial data. However, I found the tracking bit intriguing, along with the affordance+interface to support it. It's funny how they brand their product as something related to role-playing games ("here are dozens of millions of people who spend a lot of time and money playing MMORPGs (Massive Multiplayer Online Role Playing Games), but... aren't our REAL LIVES much better RPGs?")... and then extract what they think is the most important point in MMORPGs (tracking one's progress) to provide a service for "real life".

What could be pertinent is to see how they tie in all the data generated by individuals (money, health). Would there be a possibility to do that for objects?

Mobiscopes

Vlad sent me this paper yesterday: T. Abdelzaher, Y. Anokwa, P. Boda, J. Burke, D. Estrin, L. Guibas, A. Kansal, S. Madden, and J. Reich, Mobiscopes for Human Spaces, IEEE Pervasive Computing - Mobile and Ubiquitous Systems, Vol. 6, No. 2, April - June 2007. The authors describe the notion of "mobiscope", i.e. mobile sensor networks:

"a mobiscope is a federation of distributed mobile sensors into a taskable sensing system that achieves high-density sampling coverage over a wide area through mobility. (...) physically coupled to the environment through carriers, including people and vehicles. (...) Mobiscopes will be tightly coupled with their users. This presents significant human-factors design challenges and many sociocultural implications that extend beyond limited notions of privacy in data transmission and storage. (...)Mobiscopes are part of our maturing ability to silently watch ourselves and others"

Why do I blog this? documenting new terms about sensor technologies.

Glowbots: robot-based evolved relationship

Glowbots is a very intriguing project by Mattias Jacobsson, Sara Ljungblad, Johan Bodin and Jeffrey Knurek (Future Applications Lab, Viktoria Institute).

"GlowBots are small wheeled robots that develop complex relationships between each other and with their owners. They develop attractive patterns that are affected both by user interaction and communication between the robots. (...) In the current GlowBots system, when users gently pick up or put their hands around the GlowBots, they react immediately and visibly by producing new patterns on the display. The user can affect the new pattern by actuating the various sensors with sound or light. When the GlowBot is reintroduced to its robot colleagues, it starts to mingle with them and share its new pattern. The other robots are affected by it and start to evolve their own patterns and share them with their neighbors in turn. To observers, the effect is like sowing a seed that spreads among the robot population as they move around."
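To get a feel for the "sowing a seed" effect described in the quote, here is a toy sketch of how a new pattern might spread through a population by pairwise sharing. This is not the GlowBots algorithm: the list-of-pixels pattern, the `blend` and `mingle` names, and the uniform crossover rule are all assumptions made for illustration.

```python
import random


def blend(own, received, rng):
    """Mix two patterns: each pixel comes from either pattern at random.

    Uniform crossover is an assumed rule, not the one used by GlowBots.
    """
    return [rng.choice(pair) for pair in zip(own, received)]


def mingle(patterns, rounds, seed=0):
    """Simulate random pairwise encounters between robots.

    In each round, one robot (the sender) shares its pattern with another
    (the receiver), which blends it into its own pattern.
    """
    rng = random.Random(seed)
    patterns = [list(p) for p in patterns]  # work on copies
    for _ in range(rounds):
        sender, receiver = rng.sample(range(len(patterns)), 2)
        patterns[receiver] = blend(patterns[receiver], patterns[sender], rng)
    return patterns


# One robot has been handled by its owner and carries a new pattern (all 1s);
# the four others still display the old one (all 0s).
population = [[1] * 8] + [[0] * 8 for _ in range(4)]
for pattern in mingle(population, rounds=20):
    print("".join(str(pixel) for pixel in pattern))
```

Running it with different seeds shows the 1s either diffusing through the group or dying out, which is roughly the kind of population-level behavior the quote hints at.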

What is interesting is the way the project has been carried out:

"The project began with a series of interviews with people who have unusual pets. Interview results were dissected into categories and recombined to form distinct, intrinsic clusters of characteristics. These clusters were used as raw material for four personas, one of which revealed real-world attributes of a man who owns an unusual pet: (1) He does not pet his pets, nor is he interested in the pets' distinct personalities, (2) He is interested in breeding his pets to create nice patterns, (3) He enjoys reading about his pets and often meets up with people who have similar pets, to admire or even exchange pets."

Why do I blog this? I am interested in both the methodology and the outcomes. It seems to be very interesting in terms of the robots-ubicomp convergence; very well connected to the notion of history of interactions as an enabler of new relationships to objects.

Julian Bleecker on WMMNA

There is a very dense and relevant interview with Julian Bleecker on WMMNA. The range of topics covered there is amazing. Here are some excerpts I like, which can be taken as "seed content" of the near future laboratory:

"If the project of the digital age is to make everything that we have in "1st life" available in 2nd life, then I think we're on the wrong path. Laminating 1st life and 2nd life isn't about creating digital analogs. It's about elevating human experience in simple and profound ways. (...) Finding compelling ways to make 1st life legible and meaningful in 2nd life is probably one of the most fascinating, provocative experiments of the digital networked era. (...) to find experimental vectors that move towards a set of experiences and provocations that link 1st life and 2nd life so that there is a kind of effortless divide, so that it is possible to occupy both simultaneously. Not by having a connected phone so that you're wandering down a gorgeous, baroque alley in Vienna while staring at your mobile screen, trying to get Google Maps to figure out where you are — I imagine something much more translucent and less literal. (...) The kinds of experiments that will help us imagine and create a more habitable, playful world are far more provocative than what anyone trying to make their quarterly numbers will offer. These experiments must question conventional assumptions, find ways to encourage and appreciate whimsy, and recognize that pragmatism got us the world we have now. (...) The kinds of experiments I do are ones that tell stories about worlds that may be, or world's I wouldn't mind occupying myself, or cautionary stories about possible near futures that make me nervous."

And this leads Julian to describe a current project we have (Julian and I):

"turn critters — specifically, in this case, a pet dog — into interaction partners in digital worlds. We're imagining what a near-future would be like if the partners with whom we interacted were the other occupants of our world, such as pets. We're making a dog toy for a friend's one-eyed dog that will manipulate the actions of a Dwarf in World of Warcraft. So there you would have a somewhat playful provocation — pets playing in World of Warcraft. The experiment is less about actually creating a pet playable version of that very complex online game — we don't suppose for a minute that a dog can play World of Warcraft in the sense of pursuing the goals of the game or comprehending the through-line. But certainly a dog can control a WoW character to the same degree that they can control and manipulate a favorite rag-doll chew toy. This is a test balloon, floated to begin imagining-through-construction a context of participation between pets and humans in the new networked age."

Why do I blog this? Well, this is not surprising: I totally agree with Julian's criticisms and discourse about the hybridization of material/digital environments, 2nd lives and stuff like that; these are essentially things we have discussed and that we feel are important to push further. The pet dog in World of Warcraft is one of these experiments that might, in time, lead to presentations (and eventually a report). But what is important first is maybe to make it more articulate, so let's wait a bit.

"Hybrid World Lab" workshop

People interested in the hybridization between the material world and digital representations (virtual environments? second lives?) might want to check out the Mediamatic workshop called Hybrid World Lab. The event is scheduled for May 7-11 in Amsterdam.

Mediamatic organizes a new workshop in which the participants develop prototypes for hybrid world media applications. Where the virtual world and the physical world used to be quite separated realms of reality, they are quickly becoming two faces of the same hybrid coin. This workshop investigates the increasingly intimate fusion of digital and physical space from the perspective of a media maker. Some of the topics that will be investigated in this workshop are: Cultural application and impact of RFID technology, internet-of-things. Ubiquitous computing (ubicomp) and ambient intelligence: services and applications that use chips embedded in household appliances and in public space. Locative media tools, car navigation systems, GPS tools, location sensitive mobile phones. The web as interface to the physical world: geotagging and mashups with Google Maps & Google Earth. Games in hybrid space.

(Picture Fused Space - SKOR - Wachtmeister 2)

Why do I blog this? I am going to be a trainer/lecturer at that workshop, along with Timo and Matt Adams. It's right on the spot with some current near future laboratory explorations! It's going to be a good opportunity to gather some thoughts and work on them with people. People interested can have a look here and register there.

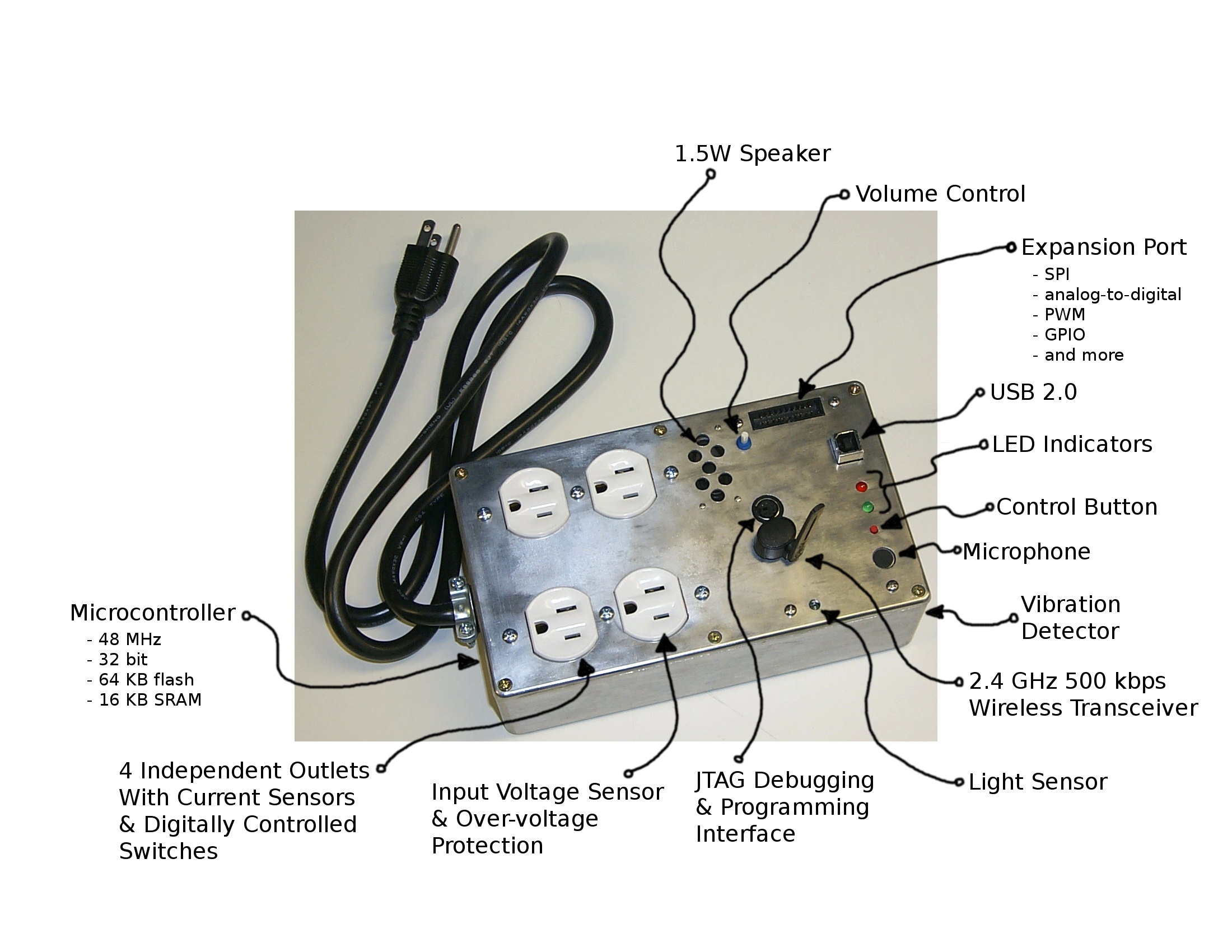

A very curious plug

(Via j*b), this Plug project by Josh Lifton. He defines it as "A power strip imbued with sensing, computational, and communication capabilities in order to form the backbone of a sensor network". The artifact looks pretty nifty:

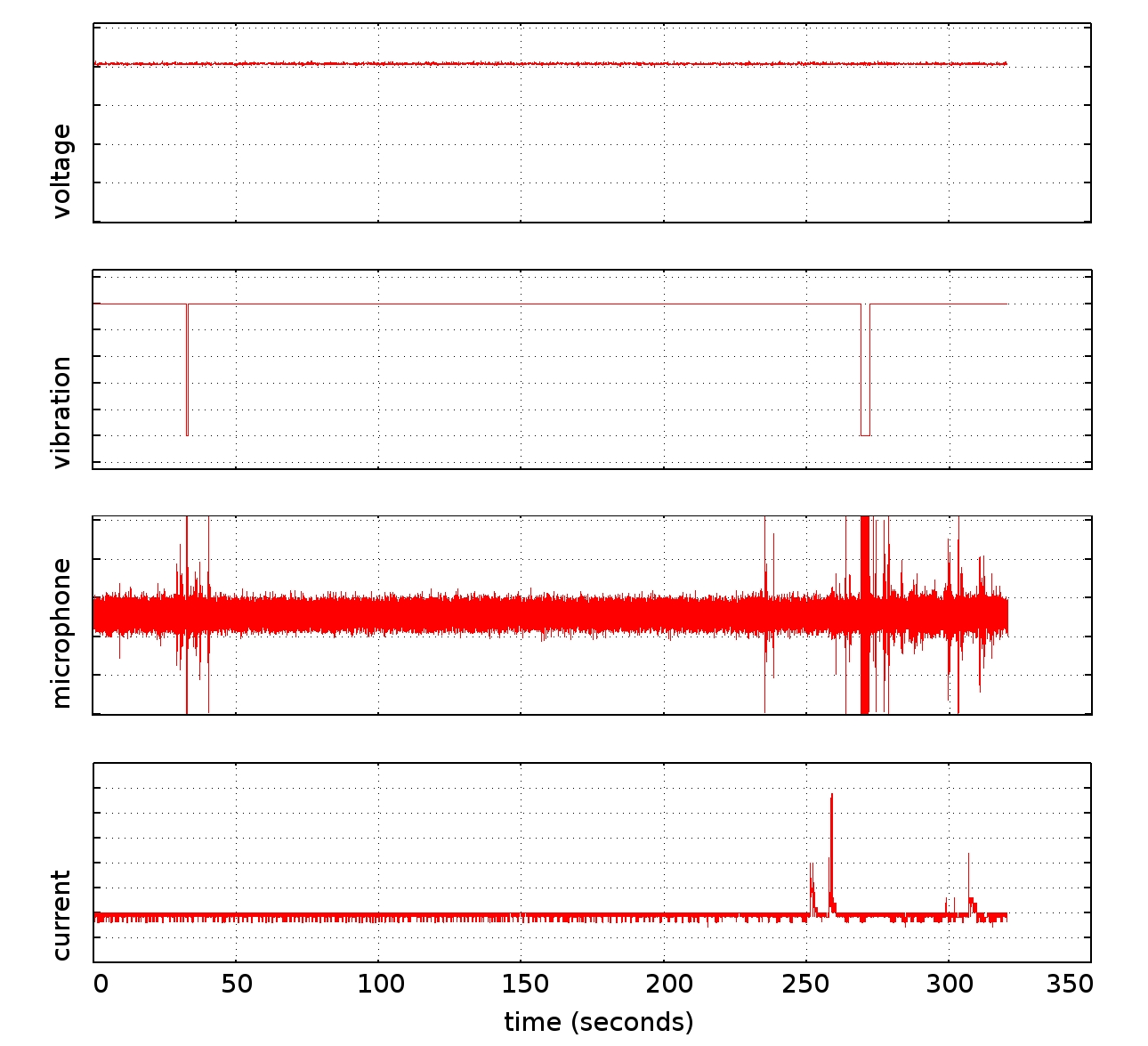

An example of data that can be extracted is presented:

The text that follows on their website describes the picture in a more comprehensive way:

"Data taken from the snack vending machine on the third floor of the Media Lab the night before TTT. There are three distinct events. First, someone walks by and hits the machine (see vibration graph). Second, two people in conversation buy a snack using a dollar bill. Third, the change from the dollar bill is used to buy the same snack. For each purchase, there are two current spikes, one for the exchange of money and one for the actuation needed to dispense the snack. Conversation ensues throughout. Note that the current and voltage plots are low-passed, peaked versions of the actual AC measurements. All data have been scaled appropriately for ease of viewing."

Why do I blog this? Understanding the story behind data/interactions is curious and very apropos when thinking about the hybridization between 1st life and 2nd lives.

A kirkyan

When talking about connections between the material world and digital environments, terminologies are still fuzzy. "Kirkyan" is a new word coined by csven:

"A kirkyan is similar to a spime or blogject, but different in that a kirkyan is actually a Thing comprised of a combination of reality instances. One instance exists in our physical world (kirkyan P) and one or more “sibling” instances exist in their respective, independent virtual worlds (kirkyan V1′, V2′, V3′, aso). They are independent yet part of a whole. (...) Kirkyans are not “virtual objects first and actual objects second” in the literal sense. It is not necessarily a product of CAD/CAM. A kirkyan might start as a sculpted ceramic piece with embedded firmware and then be three-dimensionally scanned, with all data representing the physical instantiation, kirkyan P, then used to create the transreality sibling(s). A physical replacement would, however, be replicated through a network-controlled process; most likely additive. Consequently, kirkyans do not necessarily begin as data (...) Each instantiation carries the “DNA” with which to create the other(s). Each carries the history and learnings of the other(s), so that when one expires, an evolved version can replace it; created from the data stored in the transreality sibling(s). A kirkyan can be a blogject, with the physical instantiation involved in the physical world as an interactive component of a network that includes the virtual instance(s). Additionally, each instantiation of a kirkyan independently has most of the qualities of a spime"

Why do I blog this? I'm kind of late in reading and parsing csven's great blog posts about transreality.

Early instances of 1st/2nd life connections and intersections

Like V-migo, Teku Teku Angel allows the user to take advantage of his/her movements in the physical environment to grow a virtual pet (in the form of an angel) on the Nintendo DS. It's a pedometer that measures daily steps and turns them into a means of making a heavenly creature evolve.
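The steps-to-growth idea can be sketched in a few lines. This is a guess at the general mechanism, not how Teku Teku Angel actually works: the stage names and step thresholds below are hypothetical values chosen for illustration.

```python
from bisect import bisect_right

# Hypothetical growth stages and the cumulative step counts that unlock them.
STAGE_THRESHOLDS = [0, 10_000, 50_000, 150_000]
STAGE_NAMES = ["egg", "cherub", "angel", "archangel"]


def stage_for(daily_steps):
    """Return the pet's stage given a list of daily step counts."""
    total = sum(daily_steps)
    # Find the highest threshold that the running total has reached.
    index = bisect_right(STAGE_THRESHOLDS, total) - 1
    return STAGE_NAMES[index]


week = [6_000, 5_000, 8_200, 3_100, 9_400, 12_000, 7_300]
print(stage_for(week))
```

The physical activity is reduced to a single counter here; a richer design could also use the rhythm or timing of the walks, not just the total.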

As described on Gizmodo, Otoizm is an impressive product presented at the Toy Forum 2006 in Tokyo. Basically, connected to a music player, this device embeds a tamagotchi-like character that grows according to the genre of music you listen to, and memorizes phrases or composes tunes. It also has multi-user capabilities: when connected, the characters dance with each other.

So, why is this important? Simply, it's another kind of application that engages users in a first life/second life experience. Unlike Teku Teku Angel, which is based on a physical experience, Otoizm rather works at the intersection between digital experiences: the intricate relationship between the virtual pet and the music in the form of digital bits. What is missing is an application that would take advantage of both.