(A favorite session of mine at LIFT Asia 2008 this morning, since the topic is linked to my own research. Here are my quick notes.)

Adam Greenfield's talk "The Long Here, the Big Now... and other tales of the networked city" was the follow-up to his "The City is Here for You to Use". Adam's approach here was "not technical talk but affective": about what it feels like to live in networked cities and less about the technologies that would support them. The central idea of ubicomp is a world in which all the objects and surfaces of everyday life are able to sense, process, receive, display, store, transmit and take physical action upon information. The idea is very common in Korea, where it's called "ubiquitous" or just "u-", as in u-Cheonggyecheon or New Songdo. However, this approach often starts from technology rather than human desire.

Adam's more interested in what it really feels like to live your life in such a place, or how we can get a truer understanding of how people will experience the ubiquitous city. He claims that we can begin to get an idea by looking at the ways people use their mobile devices and other contemporary digital artifacts. Hence his job as Design Director at Nokia.

For example: a woman talking on a mobile phone while walking around a mall in Singapore, no longer responding to the architecture around her but having a sort of "schizogeographic" walk (as formulated by Mark Shepard). There is hence "no sovereignty of the physical". The same goes for people in the Tokyo or Seoul metro: they're physically there but on the phone, and their commitment is in the virtual.

(Oakland Crimespotting by Stamen Design)

Adam thinks that what primarily conditions choice and action in the city is no longer physical but resides in the invisible and intangible overlay of networked information that enfolds it. The potential of this overlay takes the following forms:

- The Long Here (named in reference to Brian Eno and Stewart Brand's "Long Now"): layering a persistent and retrievable history of the things that are done and witnessed there over any place on Earth that can be specified with machine-readable coordinates. Examples of such a layered experience are the Oakland Crimespotting map or the practice of geotagging pictures on Flickr.

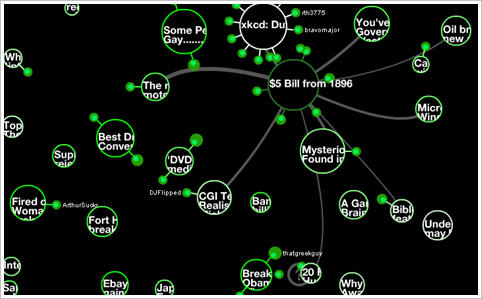

- The Big Now: making the total real-time option space of the city a present and tangible reality locally AND, globally, enhancing and deepening our sense of the world’s massive parallelism. For instance, with Twitter one can get a sense of what is happening in parallel both locally and globally. You see the world as a parallel ongoing experiment. A more complex example is to use Twitter not only for people but also for objects: see for instance Tom Armitage's Making bridges talk (Tower Bridge can twitter when it is opening and closing, which is captured through sensors and posted on Twitter). At the MIT SENSEable City Lab, there is also a project called "Talk Exchange" which depicts the connections between countries based on phone calls.

Of course, there are less happy consequences: these technologies can be used to exclude, which Adam calls the "Soft Wall": networked mechanisms intended to actively deny, delay or degrade the free use of space. Defensible space is definitely part of it, and Adam points to Steven Flusty's categories describing what spaces become: "stealthy, slippery, crusty, prickly, jittery and foggy". The result is simply differential permissioning without effective recourse: some people have the right to access certain places and others don't. When a networked device does that, you have less recourse than when it's a human with whom you can argue, talk, fight, etc. Effective recourse is something we take for granted that may disappear.

We'll see profound new patterns of interactions in the city:

- Information about cities and patterns of their use, visualized in new ways. But this information can also be made available on mobile devices locally, on demand, and in a way that it can be acted upon.

- Transition from passive facades (such as huge urban displays) to addressable, scriptable and queryable surfaces. See for example the Galleria West by UNStudio and Arup Engineering or Pervasive Times Square (by Matt Worsnick and Evan Allen), which show what it may look like.

- A signature interaction style: information processing dissolving into behavior (simple behavior, with no external token of the transaction left)

The takeaway of this presentation is that networked cities will respond to the behavior of their residents and other users, in something like real time, underwriting the transition from browse urbanism to search urbanism. And Adam's final word is that the future of networked cities is up to us, that is to say designers, consumers, and citizens.

Jef Huang: "Interactive Cities" then built on Adam's presentation by showing projects. To him, a fundamental design question is "How to fuse digital technologies into our cities to foster better communities?". Jef wants to focus on how digital technology can augment physical architecture to do so. The premise is that the basic technology (mobile technology, facade tech, LEDs, etc.) is mature, or has at least reached a certain stage of maturity. What is lacking is the way these technologies have been applied in the city. For instance, if you take a walk in any major city, the most obvious appearances of ubiquitous tech are surveillance cameras and media facades (that bombard citizens with ads). You can compare them to physical spam, but there's no spam filter: you can only go around it, close your eyes or wear sunglasses. You can compare the situation to the early days of the Web.

When designing networked cities, the point is to push the city along the same path the Web took: towards more empowering and more social platforms. Jef then showed some projects along that line: Listening Walls (Carpenter Center, Cambridge, USA), the now famous Swisshouse physical/virtual wall project, and Beijing Newscocoons (National Arts Museum of China, Beijing), which gives digital information such as news or blog posts a sense of physicality through inflatable cocoons. Jef also showed a project he did for Madrid's bid for the 2012 Olympics: real-time, real-scale urban traffic nodes. Another intriguing project is "Seesaw connectivity", which lets people learn a new language in airports through a shared seesaw (one half in one airport and the other half in another).

The bottom line of Jef's talk is that fusing digital technologies into our cities to foster better communities should go beyond media façades and surveillance cams: it should allow empowerment (from passive to co-creator) and enable social, interactive and tactile dimensions. Of course, this raises some issues, such as the status of the architecture (public? private?) and sustainability questions.

The final presentation, by Soo-In Yang, called "Living City", is about the fact that buildings have the capability to talk to one another. Sensors are now disappearing into the woodwork and all kinds of data are transferred instantly and wirelessly, so buildings will communicate information about their local conditions to a network of other buildings. His project is an ecology of facades where individual buildings collect data, share it with others in their "social network" and sometimes take "collective action".

What he showed is a prototype facade that breathes in response to pollution, which he called "a full-scale building skin designed to open and close its gills in response to air quality". The platform allows buildings to communicate with cities, with organizations, and with individuals about any topic related to the data collected by sensors. He explained how this project enabled them to explore air as "public space and building facades as public space".

Yang's work is very interesting because they build proofs of concept: rather than relying only on virtual renderings and abstract ideas, they installed different sensors on buildings in NYC. They could then collect and share the data from each wireless sensor network, allowing any participating building (the Empire State Building and the Van Alen Institute building) to talk to the others and take action in response. In a sense they use the "city as a research lab".

Writing a chapter about geolocation history, I am digging into the issue of GPS accuracy, as it is often a "pain point" in the user (driver) experience. The Road Measurement Data Acquisition System has an interesting paper about it, by Chuck Gilbert.

(Picture of Stamen's Digg swarm visualization)

(Picture of Stamen's Cabspotting)