This morning, Jeffrey Huang gave a very interesting talk at the classroom of the future workshop. It was about lessons and challenges regarding new types of environments that would benefit from technologies to connect people from different places. My raw notes are below:

lessons learned from connected classrooms

2 examples

- swisshouse project (2000-2010): to address brain drain, a network of 20 buildings in strategic locations, to transfer knowledge back to Switzerland

- digital agora: 4 buildings (Washington, Nafplio, Alexandria, Callisto: a boat): a structure to facilitate seminars of the Harvard Center for Hellenic Studies

in both projects, architecture is an interface

using walls, ceilings... to connect

this idea is not new, already in the 17th century: Athanasius Kircher (1650): walls and ceilings as "interfaces", you can stand next to a statue and eavesdrop on a conversation, or spread a secret by whispering it to the wall

another example: 1964 Eames' IBM pavilion (to show the progress of IBM at the time, the building was a communication vector)

today: it's much easier to do it, these interfaces have become smaller and more powerful

how to embed this tech in architecture

design principles used to create those spaces

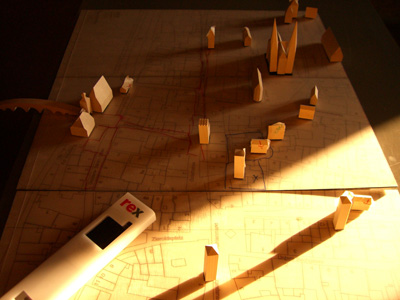

- hardware component: modular system with basic shells to accommodate different configurations + plug and play modules. In the swisshouse, the floor is the infrastructure into which they plug walls

- software: building OS (operates the i/o devices: light, audiovisual...) and application layers (ambient, artifact, people). Ambient layer = what is part of the wall, there are lots of displays. Artifact layer = where you display artifacts, flat, for example on tables. People layer = the way to bring remote people into the space, LCD screens on rollers.
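To make the layering above more concrete, here is a minimal sketch of how a "building OS" could route content to plug-and-play devices grouped by layer. This is not the Swisshouse software: the class names (`BuildingOS`, `Device`, `Layer`), the methods and the device names are all hypothetical, chosen only to illustrate the idea of ambient, artifact and people layers sitting on top of a shared device registry.

```python
from dataclasses import dataclass, field
from enum import Enum


class Layer(Enum):
    """The three application layers mentioned in the talk."""
    AMBIENT = "ambient"    # displays that are part of the walls
    ARTIFACT = "artifact"  # flat surfaces such as tables
    PEOPLE = "people"      # screens on rollers showing remote people


@dataclass
class Device:
    """A hypothetical plug-and-play output device."""
    name: str
    layer: Layer
    content: str = ""


@dataclass
class BuildingOS:
    """Hypothetical registry that operates the devices of one building."""
    devices: dict = field(default_factory=dict)

    def plug(self, device):
        # Plugging a module in simply registers it under its name.
        self.devices[device.name] = device

    def unplug(self, name):
        # Removing a module must not break the rest of the space.
        self.devices.pop(name, None)

    def show(self, layer, content):
        """Send content to every device of a layer; return their names."""
        updated = []
        for device in self.devices.values():
            if device.layer is layer:
                device.content = content
                updated.append(device.name)
        return sorted(updated)


# Assumed example configuration, not taken from the talk.
building = BuildingOS()
building.plug(Device("north-wall", Layer.AMBIENT))
building.plug(Device("south-wall", Layer.AMBIENT))
building.plug(Device("cafe-table", Layer.ARTIFACT))
building.plug(Device("roller-screen", Layer.PEOPLE))
building.show(Layer.AMBIENT, "interactive wallpaper")
```

In this sketch, swapping a wall is just an `unplug` followed by a `plug`, which is one way to read the point that flexibility comes from software rather than from technology built into the walls.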

4 key challenges:

- Different ways of knowledge transfer: how to go beyond traditional lectures and passive behavior: this is achieved through different spaces: knowledge cafés, digital wall (for more traditional lectures and presentations), arenas (step down spaces for intimate debate) + curtains to reduce noise.

- Different levels of presence: problem that you have when people are remote... schedule remote presentations... lack of copresence sense, translucent presence... awareness of others, design a presence that is more gradual. Always-on video that connects spaces (and not people).... virtual cocktail after the lecture in both Boston and Zurich. RFID reader to register physical visitors in order to know who is where (Swatch watch with rfid tag). Viz of who is where

- Adaptive usage and future: trading flexibility and coherence... accommodate different knowledge transfer scenarios, can evolve over time versus obsolescence, adapt architecture in real time through software-driven customization. For example: the glasswall has no technology in it, you can replace it. The design of the 2 projects is 50% about software: different interactive wallpapers. Microphones in certain locations that capture conversations and represent them on walls ("sediments of thoughts"), chat on a wall, tangible and playful wall.

- Beyond the desktop: choreographing connectivity: coordination of multiple displays and multiple inputs, pervasiveness of mapping (superimpose versus invent new elements), layered approach: context defines content (ambient, artifact, people layers), "tangible" interfaces. Pinwheels that generate wind depending on where people are present (if people are in asia...).
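The RFID check-in and the pinwheels mentioned in the challenges above could be connected roughly as follows. This is only a sketch under my own assumptions: the talk did not describe the actual implementation, and `PresenceRegistry`, `pinwheel_speed` and the `max_people` scaling parameter are hypothetical names introduced for illustration.

```python
from collections import Counter


class PresenceRegistry:
    """Hypothetical record of which RFID tag was last seen at which node."""

    def __init__(self):
        self._location_of = {}  # tag id -> node name

    def check_in(self, tag_id, node):
        # A new reading replaces the previous location of the same tag.
        self._location_of[tag_id] = node

    def check_out(self, tag_id):
        self._location_of.pop(tag_id, None)

    def headcount(self):
        """Number of registered visitors per node ("who is where")."""
        return Counter(self._location_of.values())


def pinwheel_speed(headcount, remote_node, max_people=20):
    """Map the number of people at a remote node to a speed in [0.0, 1.0].

    max_people is an assumed saturation point, not a value from the talk.
    """
    people = headcount.get(remote_node, 0)
    return min(people, max_people) / max_people


# Assumed example: two visitors in Boston, one in Zurich.
registry = PresenceRegistry()
registry.check_in("tag-001", "Boston")
registry.check_in("tag-002", "Boston")
registry.check_in("tag-003", "Zurich")
speed_in_zurich = pinwheel_speed(registry.headcount(), "Boston", max_people=4)
```

The point of the sketch is the gradual notion of presence: the pinwheel in one node does not show who is in the other node, only how much is going on there.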

no scientific studies of the results yet

lessons learned from first nodes so far:

(+) community creation capacity - events, rituals

informality, spontaneous interactions

adaptivity of walls

(-) acoustic transparency versus visual transparency (in the end people just want visual transp and not acoustic transp.)

connectivity (most of the actions are only local, but this is because there is just 1 node with a digital agora... it's as if only one person had a fax machine)

Q&A:

Stefano Baraldi: how do people learn to use that space?

Jef: there is a tech person who sets up and maintains this stuff, so visitors do not have to learn; people interact there intuitively, because things should work similarly.

Why do I blog this? I liked the approach and the discussion about the key challenges of augmented environments. Besides, the infrastructure is very well thought out, with less technology in the environment (it's easy to remove elements such as walls) and a reliance on software components instead.

More about this:

Huang, J. and Waldvogel, M. (2004). The swisshouse: an inhabitable interface for connecting nations. Proceedings of the 2004 conference on Designing interactive systems: processes, practices, methods, and techniques, pp. 195-204.

